World Annotator - Image captioning

While image classification is a well-understood problem, modern deep learning seq2seq models let us go one step further: from simple image labels to full sentences describing complex visual scenes. Machine learning is getting increasingly chatty.

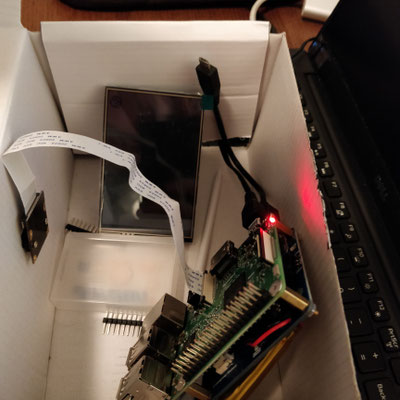

But what good is a lab setup describing stock photos from the internet when we can go out on the streets and annotate image streams in real time? That is the goal of the World Annotator project. I took a battery-powered Raspberry Pi, added a camera and an LCD screen, and got myself a cute hand-held device to point at real-world situations. Although it's possible to write a mobile app instead, building a dedicated custom device gives me full control over the hardware and a full Linux platform, and it's a chance to gain more experience running computer vision and ML on embedded ARM devices.
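As a rough sketch of how the device loop might be structured (this is not the actual World Annotator code), the idea is: grab a frame, ask a captioning model for a sentence, and fit that sentence onto a small screen. Everything named here is an assumption for illustration: `caption_fn` and `show_fn` are hypothetical stand-ins for the real model and display driver, and the 16x2 character layout is just a placeholder for whatever the LCD actually offers.

```python
import textwrap
from typing import Any, Callable, Iterable, List


def format_for_lcd(caption: str, columns: int = 16, rows: int = 2) -> List[str]:
    """Wrap a caption into fixed-size lines; text beyond `rows` lines is dropped.

    The 16x2 default is an assumed display size, not a known spec.
    """
    lines = textwrap.wrap(caption, width=columns)[:rows]
    lines += [""] * (rows - len(lines))           # pad missing rows
    return [line.ljust(columns) for line in lines]  # pad each row to full width


def annotate_stream(
    frames: Iterable[Any],
    caption_fn: Callable[[Any], str],        # hypothetical: frame -> sentence
    show_fn: Callable[[List[str]], None],    # hypothetical: draws lines on the LCD
    columns: int = 16,
    rows: int = 2,
) -> int:
    """Caption every frame and push the formatted text to the display.

    Returns the number of frames processed.
    """
    count = 0
    for frame in frames:
        caption = caption_fn(frame)
        show_fn(format_for_lcd(caption, columns, rows))
        count += 1
    return count


if __name__ == "__main__":
    # Stand-ins: integers as "frames", a canned caption, and print as the "LCD".
    fake_frames = [0, 1]
    annotate_stream(
        fake_frames,
        caption_fn=lambda frame: "a man riding a bike down a street",
        show_fn=lambda lines: print("\n".join(lines)),
    )
```

Passing the model and the display in as plain callables keeps the loop testable on a laptop, with the camera, network, and screen swapped in only on the device itself.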

More details to follow soon. (MXNet + TensorFlow setup, running models, video in the field.)