cvlab

I noticed I spent quite some time last year setting up computer vision prototypes and experimenting with various CV deep learning models. To simplify experimentation and reduce setup costs, I decided to create a mini computer vision lab (cvlab), consisting of a set of hardware devices and a software playground library built on popular CV libraries. A common setup makes it easier to try out new models and hardware, and I'm planning to extend it over time. Both desktops (Intel + NVIDIA GPU) and embedded systems (ARM, Raspberry Pis) are supported.

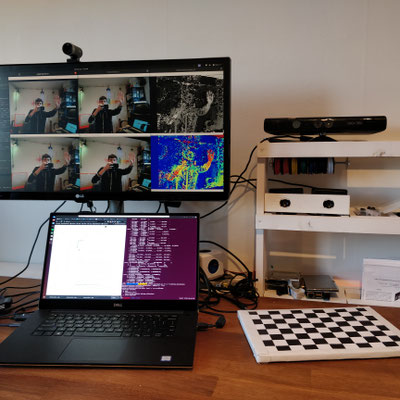

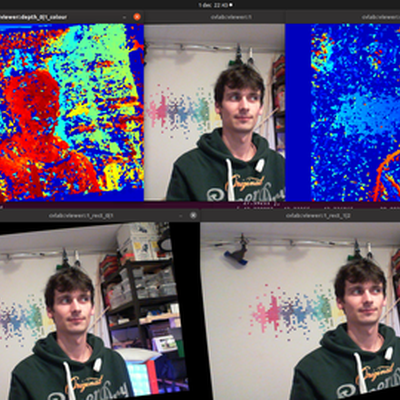

Hardware currently includes an array of webcams (among them a fixed camera pair for multiview applications), a Kinect, Raspberry Pis with cameras and mounted LCD screens, and ESP32-cam boards.

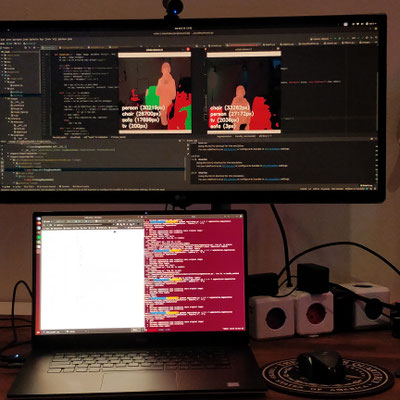

The software library can be found on GitHub. It uses OpenCV, MXNet, Pillow, and of course SciPy/NumPy. It currently includes support for camera streams and videos, various classical algorithms such as stereo vision and optical flow (a blast from the past!), inference for deep learning models in applications like detection and segmentation, and a few utilities for handling things like images and UIs. Applications are tested on Linux, both on desktop and on a Raspberry Pi (v3+).
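As a rough illustration of the kind of classical stereo vision mentioned above (and of what a fixed camera pair is useful for), here is a minimal, naive block-matching sketch in NumPy. It is not cvlab's API: the function names and parameters (`block_matching_disparity`, `depth_from_disparity`, `max_disparity`, `block`, `focal_px`, `baseline_m`) are hypothetical, and it assumes an already rectified grayscale image pair. Depth then follows from disparity $d$ as $Z = fB/d$, with focal length $f$ in pixels and baseline $B$ in meters.

```python
import numpy as np


def block_matching_disparity(left, right, max_disparity=16, block=5):
    """Naive SAD block matching on a rectified grayscale stereo pair (hypothetical helper).

    For each left-image pixel, search the same row of the right image for the
    shift d in [0, max_disparity) that minimizes the sum of absolute
    differences over a block x block window. Border pixels stay at 0.
    """
    left = left.astype(np.float32)
    right = right.astype(np.float32)
    h, w = left.shape
    r = block // 2
    disparity = np.zeros((h, w), dtype=np.int32)
    for y in range(r, h - r):
        # Start far enough right that every candidate window stays inside the image.
        for x in range(r + max_disparity, w - r):
            patch = left[y - r:y + r + 1, x - r:x + r + 1]
            costs = [
                np.abs(patch - right[y - r:y + r + 1, x - d - r:x - d + r + 1]).sum()
                for d in range(max_disparity)
            ]
            disparity[y, x] = int(np.argmin(costs))
    return disparity


def depth_from_disparity(disparity, focal_px, baseline_m):
    """Convert disparity (pixels) to depth (meters) via Z = f * B / d; d == 0 maps to inf."""
    d = disparity.astype(np.float64)
    depth = np.full(d.shape, np.inf)
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth
```

The double loop is deliberately simple and slow; in practice one would more likely reach for OpenCV's `cv2.StereoBM_create` or `cv2.StereoSGBM_create`, which implement optimized (semi-)global variants of the same idea.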

Ideas for extensions include support for more hardware devices (ROS for robotics applications, a driving robot platform, Intel RealSense, ESP32-cam streams + on-board algorithms), interfaces to other neural network libraries (ONNX, PyTorch, TensorFlow), a personal model zoo, and ML lifecycle frameworks (MLflow, Kubeflow?).

I'll post updates on this page about the prototypes I'm working on, but I will also start using these common components in other, separate projects.